Let's imagine a 1000-mile road trip on an interstate that has no speed limit. In a gasoline-powered car, you will always arrive at your destination sooner the faster you drive. You'll have to stop for fuel more frequently if you drive faster, but refueling is so quick that there is no practical limit on speed when the goal is simply to arrive sooner.

Now consider the same trip in the Bolt. It takes longer to recharge, and the closer to full the battery becomes, the slower the recharge. Driving 100 MPH on this trip likely wouldn't get you to the destination quicker than driving 65 MPH considering the extra stops needed to recharge.

Has anyone done the math to determine the optimal speed vs efficiency to arrive at a long distance destination the quickest? What does that formula look like, especially given the non-linear charging characteristics?

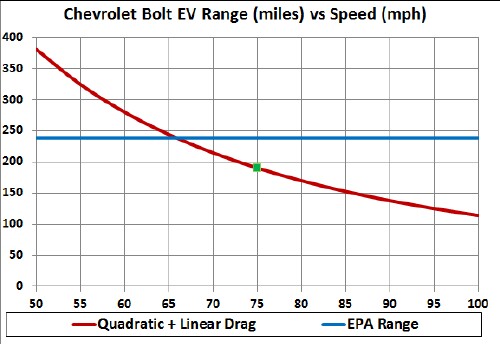

This graph is what got me thinking about the topic:

Some very rough estimates based on this graph show that traveling at 70 MPH will require 5 full charges (1000 mi distance / 200 miles per charge). At 100 MPH, you would need 10 full charges (1000 mi distance / 100 miles per charge). At 70 MPH, it would take about 14hrs 17min of driving to cover 1000 miles. At 100 MPH, it would take 10hrs, for a difference of 4hrs 17min.

It would certainly take more than 4hrs 17min to get 5 more full charges, so clearly traveling at 100 MPH on a long road trip wouldn't get you to the destination quicker than traveling at 70 MPH.
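To put a number on that, here is a rough sketch of the same arithmetic in Python. It uses only the two figures I read off the graph (200 miles per charge at 70 MPH, 100 miles per charge at 100 MPH) and works out how fast a full charge would have to be for 100 MPH to come out ahead. The function names are just mine for illustration.

```python
DISTANCE_MI = 1000

def driving_hours(speed_mph, distance_mi=DISTANCE_MI):
    """Hours spent moving, ignoring all stops."""
    return distance_mi / speed_mph

def full_charges(range_mi, distance_mi=DISTANCE_MI):
    """Full-battery equivalents of energy used over the trip."""
    return distance_mi / range_mi

# Rough figures read off the graph: speed in MPH, range in miles per charge.
slow_speed, slow_range = 70, 200
fast_speed, fast_range = 100, 100

time_saved_h = driving_hours(slow_speed) - driving_hours(fast_speed)
extra_charges = full_charges(fast_range) - full_charges(slow_range)

# The faster speed only wins if each extra charge costs less than this.
break_even_min = time_saved_h / extra_charges * 60

print(f"Driving time saved at {fast_speed} MPH: {time_saved_h:.2f} h")
print(f"Extra full charges needed: {extra_charges:.0f}")
print(f"Break-even time per full charge: {break_even_min:.0f} min")
```

In other words, with these rough numbers 100 MPH would only beat 70 MPH if a full charge took less than about 51 minutes.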

What is the optimal speed given this scenario?
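Here is a minimal sketch of how one might search for that speed. Everything beyond the two graph points is an assumption on my part: I fit a power law through (70 MPH, 200 mi) and (100 MPH, 100 mi) to guess the range at other speeds, I treat every full charge as costing a fixed number of hours (so the charging taper is ignored), and I assume all energy used on the trip gets recharged along the way. The charge times in the loop are placeholders, not measured Bolt figures.

```python
import math

DISTANCE_MI = 1000

# Two rough points read off the graph.
V0, R0 = 70.0, 200.0   # 200 miles per charge at 70 MPH
V1, R1 = 100.0, 100.0  # 100 miles per charge at 100 MPH

# ASSUMPTION: range follows a power law, range(v) = R0 * (V0 / v) ** K,
# with K chosen so the curve passes through both points.
K = math.log(R0 / R1) / math.log(V1 / V0)

def range_mi(speed_mph):
    """Estimated miles per full charge at a steady speed (assumed model)."""
    return R0 * (V0 / speed_mph) ** K

def trip_hours(speed_mph, hours_per_full_charge):
    """Driving time plus charging time for the whole trip."""
    driving = DISTANCE_MI / speed_mph
    charging = DISTANCE_MI / range_mi(speed_mph) * hours_per_full_charge
    return driving + charging

def best_speed(hours_per_full_charge, speeds=range(40, 121)):
    """Whole-number speed in MPH that minimizes total trip time."""
    return min(speeds, key=lambda v: trip_hours(v, hours_per_full_charge))

# Placeholder charge times in hours per full battery.
for t in (0.5, 1.0, 1.5):
    v = best_speed(t)
    print(f"{t:.1f} h per full charge: best speed about {v} MPH, "
          f"trip about {trip_hours(v, t):.1f} h")
```

The general pattern under this model is that the slower the charging, the lower the optimal cruising speed. The actual numbers depend entirely on the real consumption curve and charge times.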

To complicate the question, should the vehicle be charged to full each time, or is it more time efficient to only charge to a certain percentage, and make more frequent charging stops?
